Updates, events and opportunities

Personal news, some must-attend online events and an opportunity for some...

Hello H.A.I.R. Community,

Firstly, some big. No… huge news from me this week.

I’ve joined Warden AI as Head of Responsible AI and Industry Engagement.

Having sat in almost every seat in this industry - from the recruitment desk at Reed, to running workforce solutions for the public sector, to the GTM and product teams of HR Tech vendors, to advising global leaders on AI governance - I’ve seen this market from every angle.

For years, the focus was purely on innovation: "What can this tech do?"

But recently, the conversation has shifted. Now, it is: "Can we trust it?"

And very recent industry headlines have made one thing clear: The "Wild West" of AI is over. The era of Assurance has begun.

That is why I am joining Warden.

Warden isn't just another tech platform. It is the infrastructure of trust for the HR industry. 2025 was the breakout year for the team: evolving from a single use-case startup to working with 40+ leading HR Tech vendors to set the standard of AI assurance for HR.

My mission is to match that momentum: To turn "Responsible AI" from a vague buzzword into a measurable, defensible standard.

At Warden, we are building the Trust Infrastructure that enables:

• Vendors to sell their innovation with confidence.

• Staffing Firms to deploy AI at scale without fear of regulatory blowback.

• Employers to hire efficiently while proving their systems are compliant and safe.

• Candidates to trust that they are being treated fairly.

If evidencing trust in AI hiring is a priority for you or your organization in 2026, I’d love to hear how you’re approaching it. Feel free to drop me a message.

I’m excited to get to work with Jeffrey Pole and the team.

And for my first act in this role…

I didn't expect the definition of "Trust" to be tested quite this fast after joining Warden.

The Eightfold AI class action last week has fundamentally widened the scope of HR Tech compliance. For years, we have rightly focused on Algorithmic Bias, ensuring our tools don't discriminate. That work remains critical.

But this lawsuit adds a second, equally urgent front to the battlefield: Consumer Reporting Law (FCRA).

To have a defensible strategy, we must solve for both.

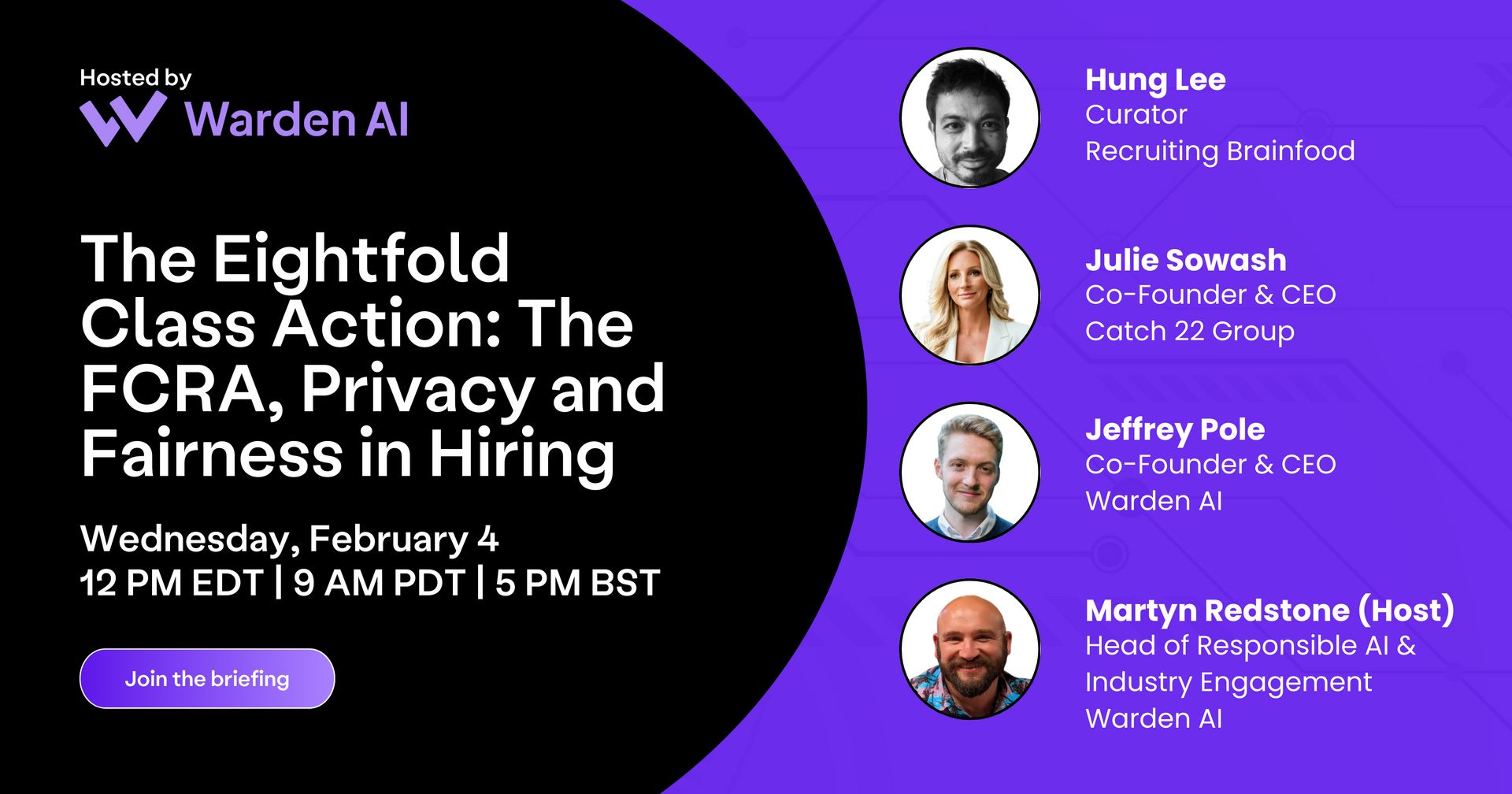

I’m hosting a briefing to discuss how we navigate this expanded landscape. I’ll be joined by:

Hung Lee (Curator, Recruiting Brainfood)

Julie Sowash (CEO, Catch 22 Group)

Jeffrey Pole (CEO, Warden AI)

We will deconstruct the TalentBin precedent, the "Consumer Report" trap, and the governance for this new layer of risk.

Wednesday, Feb 4th

12pm EST | 9am PST | 5pm GMT

What Responsible AI Means for Recruiters

Recently, I was asked if I hold the record for most mentions in Hung Lee’s Recruiting Brainfood newsletter (definitely subscribe if you haven’t already).

Well, apparently, he can’t get enough of me, so he’s invited me onto his webinar this week, all on the subject of Responsible AI.

This webinar explores the reality behind “responsible AI” in talent acquisition, cutting through vague principles and focusing on what recruiters actually need to know. We’ll examine why so many organisations still lack formal AI governance, why confidence in bias reduction remains low, and what that means for teams deploying AI at scale. Drawing on 2024–2025 data and real-world TA use cases, the discussion will unpack the tension between automation, efficiency, and human accountability.

Key areas covered include:

• What responsible AI really means in a TA context

• Governance frameworks recruiters should understand—even if legal owns them

• Bias, fairness, and explainability in screening and assessment tools

• Legal and regulatory risk, including emerging obligations under the EU AI Act and employment law

• The role of recruiters as AI operators, not just end users

• How to balance speed, cost savings, and candidate trust

• What “human-in-the-loop” looks like in practice

Listeners will learn how to evaluate their current AI stack, ask better questions of vendors, reduce risk exposure, and build hiring processes that are efficient, defensible, and fair.

Finally, Bot Jobs is for sale

I want to share something important because this community understands the recruitment and talent space better than anyone.

After over five years of building Bot Jobs, I’ve decided to put the platform up for sale.

Bot Jobs started in 2020 because I couldn’t find a dedicated place to discover roles in Conversational AI. Since then, it’s grown into a focused job board with a global audience, consistent traffic, strong engagement, and thousands of applications from people actively working in this space.

At this point, Bot Jobs doesn’t need reinventing — it needs an owner (or group of owners) who wants to take a proven platform and turn on the commercial levers. The foundations are already there: audience, SEO, newsletter, workflow, and a clear niche.

I’m sharing this here because:

• Some of you may be interested in acquiring Bot Jobs outright

• Some of you may want to partner with others and acquire it together

• Some of you may know someone who would be a great fit

This isn’t a fire sale and it’s not speculative — it’s a real, operating platform with real usage, looking for the right next owner.

If this sparks your interest, the next step is simply a conversation. No pressure, no pitch deck theatrics — just a chance to explore whether it makes sense.

You can reply directly to this newsletter or message me privately on LinkedIn, and I’ll happily share more details.

Until next time,
